EDIT : that feature is now available as a core module in NS !

Preliminary note : this is work in progress and comes without any warranty.

Why

While I like the general concept of nethserver’s backup strategy, I found that duplicity was not the best engine, especially when nethserver is used as a file server with multiple terabytes of files to back up :

- Heavy CPU load

- Awful Data throughput

- Files stored in multi-pieces archives, making it hard to restore manually and more prone to break if only one piece of the archive gets corrupted

- Necessity (why ?) to make a full backup every week at least.

- Backing up 2 TB of data takes more than 24 hours, during which the server is on its knees.

What

Coming initially from the OSX world, I found a nice script that mimics Apple Time Machine’s way of doing backups.

- Each backup is in its own folder named after the current timestamp. Files can be copied and restored directly, without any intermediate tool.

- It is possible to backup to/from remote destinations over SSH.

- Files that haven’t changed from one backup to the next are hard-linked to the previous backup so take very little extra space.

- Automatically purge old backups - within 24 hours, all backups are kept. Within one month, the most recent backup for each day is kept. For all previous backups, the most recent of each month is kept.

- The script is mature and very nicely written. There are some helpers that make it possible to have multiple backup profiles / destinations.
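To illustrate the hard-linking point from the list above, here is a minimal sketch of the general technique using rsync's `--link-dest` option. It is not the script's actual code: the function name `snapshot`, its arguments and the `latest` symlink handling are assumptions made for the example, and it relies on GNU `readlink` and `ln`.

```shell
#!/usr/bin/env bash
# Sketch only: one timestamped folder per backup, with unchanged files
# hard-linked to the previous snapshot through rsync's --link-dest option.
# snapshot SRC DEST_ROOT STAMP  (all three names are hypothetical)
snapshot() {
    local src=$1 dest_root=$2 stamp=$3
    local target="$dest_root/$stamp"
    mkdir -p "$dest_root"
    if [ -e "$dest_root/latest" ]; then
        # Files identical to those in the previous snapshot are created as
        # hard links, so they take almost no extra space.
        rsync -a --link-dest="$(readlink -f "$dest_root/latest")" "$src/" "$target/"
    else
        # First run: nothing to link against, make a plain copy.
        rsync -a "$src/" "$target/"
    fi
    # Repoint the "latest" symlink at the snapshot just made.
    ln -sfn "$stamp" "$dest_root/latest"
}
```

Calling `snapshot /path/to/data /path/to/backups 2024-01-02-000000` twice with different stamps should leave two complete-looking folders in which unchanged files share the same inode.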

All in all, I personally can only see advantages against duplicity that was “never built to handle big volumes of data” (sic!).
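The purge policy listed above (everything within 24 hours, one backup per day within a month, one per month before that) can be sketched as a small selection function. This is only an illustration of the policy as described here, not the script's own code. The function name `select_backups_to_keep`, the `YYYY-MM-DD-HHMMSS` folder name format, the 31-day "month" and the UTC interpretation of the names are all assumptions; it needs bash 4+ and GNU `date`.

```shell
#!/usr/bin/env bash
# Sketch only: reads backup folder names (assumed format YYYY-MM-DD-HHMMSS)
# on stdin and prints the ones the described policy would keep.
# $1: reference time as a Unix epoch (defaults to now).
select_backups_to_keep() {
    local now=${1:-$(date +%s)}
    local one_day=86400
    local one_month=$((31 * 86400))   # assumption: "one month" = 31 days
    local name epoch age bucket
    local -A seen=()
    # Newest first, so the first name seen in a bucket is its most recent backup.
    sort -r | while read -r name; do
        # Names are interpreted as UTC timestamps (assumption).
        epoch=$(date -u -d "${name:0:10} ${name:11:2}:${name:13:2}:${name:15:2}" +%s)
        age=$((now - epoch))
        if [ "$age" -le "$one_day" ]; then
            bucket="all:$name"           # last 24 hours: keep everything
        elif [ "$age" -le "$one_month" ]; then
            bucket="day:${name:0:10}"    # last month: one per calendar day
        else
            bucket="month:${name:0:7}"   # older: one per calendar month
        fi
        if [ -z "${seen[$bucket]:-}" ]; then
            seen[$bucket]=1
            printf '%s\n' "$name"
        fi
    done
}
```

For example, `ls /path/to/backups | select_backups_to_keep` would list the folders to keep; anything not printed would be a candidate for deletion.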

I wanted to implement this script as a simple drop-in replacement for duplicity as the backup engine, without breaking the rest of the backup logic of nethserver. Thanks to the modular design of nethserver and its backup-data module, this wasn’t difficult.

No existing file has to be modified. Only one configuration property has to be changed.

How

- Install the backup-data module and configure it as needed, then disable it.

- Download the main backup engine script. I chose to put it in /root :

cd /root

git clone https://github.com/laurent22/rsync-time-backup.git

- Download my script used to interface nethserver with the main script :

cd /etc/e-smith/events/actions/

git clone https://github.com/pagaille/nethserver-backup-data-rsync_tmbackup.git

Review the script to ensure that the options fit your configuration (it should be the case).

- Modify the configuration db to make nethserver use the new script :

db configuration setprop backup-data Program rsync_tmbackup

- Add a cron job to launch the backup as often as you want by adding something like

0 23 * * * /usr/sbin/e-smith/backup-data

to the file /var/spool/cron/root
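If the setup is scripted, the cron step can be made safe to re-run. The helper below is a minimal sketch; the function name `add_cron_line` is hypothetical. It appends a line to a crontab file only when that exact line is not already there.

```shell
#!/usr/bin/env bash
# Sketch only: append a cron entry to a crontab file unless the exact same
# line is already present, so running the setup twice creates no duplicate.
# add_cron_line FILE LINE  (hypothetical helper)
add_cron_line() {
    local file=$1 line=$2
    touch "$file"
    # -x: match whole lines only, -F: treat the entry as a literal string
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example with the entry from this post (to be run as root):
# add_cron_line /var/spool/cron/root '0 23 * * * /usr/sbin/e-smith/backup-data'
```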

That’s all. You may launch backup-data and watch the magic happen through the usual log files (see backup module documentation). Everything works except the GUI : pre-backup tasks (sql dumps, config-backup, etc), post-backup tasks, custom files included or excluded, mail notifications, and even the dashboard’s backup status gets updated.

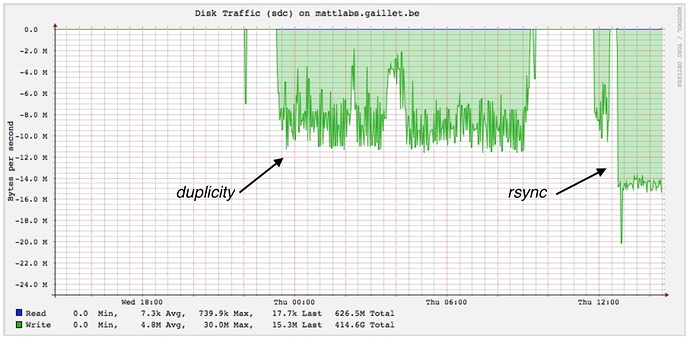

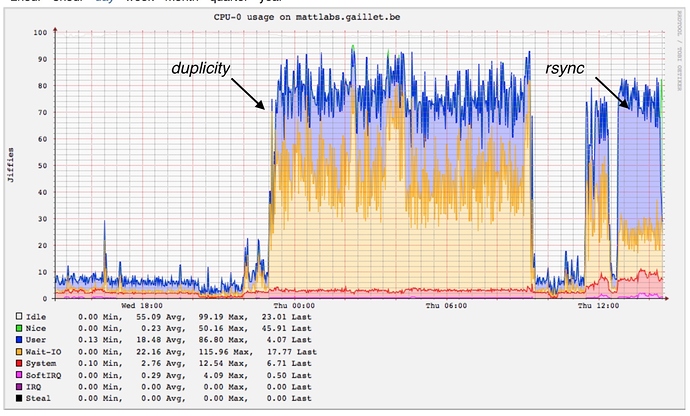

Performance Comparison with duplicity

Tests were run on a very basic 2 x Intel® Core™2 Duo CPU E6550 @ 2.33GHz with a USB2 hard disk attached for backups.

Runs almost twice as fast on big files :

Requires roughly as much CPU power, but less of the load is IO-related, which makes it possible to run the process as “nice” (low priority) :
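One way to do that, as a minimal sketch: wrap the command in `nice`, and in `ionice` too when it is available and usable. The wrapper name `run_low_priority` is hypothetical, and whether the backup-data module needs anything more than this is not something I have checked.

```shell
#!/usr/bin/env bash
# Sketch only: run a command at the lowest CPU priority, and in the idle
# I/O class when ionice exists and works on this system.
# run_low_priority COMMAND [ARGS...]  (hypothetical wrapper)
run_low_priority() {
    if command -v ionice >/dev/null 2>&1 && ionice -c 3 true 2>/dev/null; then
        # -c 3 is the "idle" I/O scheduling class
        ionice -c 3 nice -n 19 "$@"
    else
        nice -n 19 "$@"
    fi
}

# Example with the command from this post:
# run_low_priority /usr/sbin/e-smith/backup-data
```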

What’s next / TO DO

- A GUI should obviously be created, e.g. to handle the ssh ability of rsync for remote storage. Sadly I’m far from even thinking about doing this myself.

- The restore script has to be modified to ensure automated restoration works. Right now I guess that simply copying the saved files from the latest folder using something like

rsync -aP /path/to/backup/latest/ /

onto a reinstalled nethserver should do it.

- The “restore” tab for the GUI should also be updated.

- Currently the backup script backs up everything each time it is launched. That requires a great deal of resources to dump the sql tables, etc… It could be interesting to make two cron jobs : one to make a full configuration backup, and one to back up only the file shares.
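Regarding the manual restore mentioned in the list above, a cautious way to try it is to preview what rsync would copy before doing it for real. The function below is only a sketch and has not been tested as an automated restoration. The name `restore_latest`, its arguments and the `apply` keyword are made up for the example, and it assumes the backup root contains a `latest` entry pointing at the newest snapshot.

```shell
#!/usr/bin/env bash
# Sketch only: copy the contents of the newest snapshot onto a target
# directory, as a dry run unless "apply" is passed as third argument.
# restore_latest BACKUP_ROOT TARGET [apply]  (hypothetical helper)
restore_latest() {
    local backup_root=$1 target=$2 mode=${3:-dry-run}
    local opts=(-a)
    if [ "$mode" != "apply" ]; then
        # Only list what would be transferred, change nothing.
        opts+=(--dry-run --itemize-changes)
    fi
    # The trailing slash on the source copies the snapshot's contents
    # rather than creating a "latest" folder inside the target.
    rsync "${opts[@]}" "$backup_root/latest/" "$target/"
}

# Example: preview first, then apply.
# restore_latest /path/to/backup /
# restore_latest /path/to/backup / apply
```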

I really believe that this rsync-based script is a real plus for those that use Nethserver as a (big) file server.
